BigQuery Editions pricing in practice, late July 2023

BigQuery is a fabulously capable "cloud native" data warehouse product built by our friends at Google Cloud Platform, GCP. It is the foundation of our entire data ecosystem at my current employer and my name has been on the dotted line as internal owner for a few years now. Because our FinOps tooling stack is the same as our internal BI stack, this means that BigQuery (henceforth BQ) is also the foundation of our FinOps data and visibility tooling effort as well.

We have tinkered with various flavors of controlling BQ costs since early 2022 when the Data function began to get some traction here, when we were using their standard on-demand pricing. I am here today to talk about recent innovations to their pricing model and will (try to) save historical story telling for another post.

TLDR - It's a net positive, with caveats.

The good - physical storage pricing

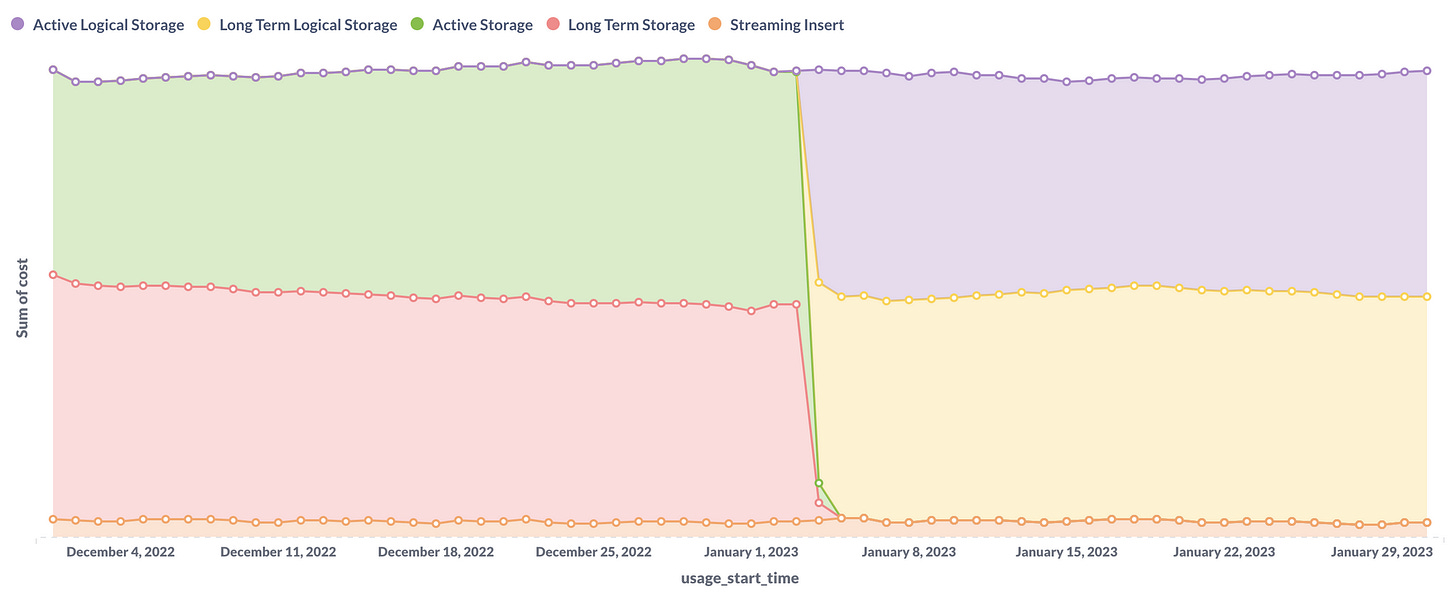

So perhaps you noticed this little SKU switcheroo at the beginning of this year:

This is a view from Metabase, our BI dashboard tool out of which we build our cost reporting. In early January this year, the BQ team suddenly got rid of 2 SKUs: Active Storage and Long Term Storage, and replaced them with Active Logical Storage and Long Term Logical Storage. The meaning of this wasn't explained at the time, but this was the setup for some announcements early this year that they'd be introducing a new storage class - physical storage.

💡

The summary of physical storage is that it really should be called "compressed storage" and is billed at 2x the rate of logical storage after compression.

Whereas logical storage is billed at $X/GB/mo, physical storage is billed at $2X/GB/mo but physical storage measures (I presume) the actual physical size of the data on disk after compression whereas logical must measure the uncompressed size. BQ very helpfully gives you a clue in the details page of a given table, the same section where other table metadata is displayed.

Detailed BQ storage pricing page here.

Here is a query that you can run from the BQ UI in each of your projects where you have storage enough to care about this topic. This runs at the project level, so be mindful of line 18 where you choose your region, and then probably export the results to a G Sheet for actually sharing around. So far these estimates seem within reality for us. Now to that reality.

We are one of Google's petabyte scale customers as all of our platform events flow constantly into BigQuery. Your mileage will most certainly vary, but after several months of waiting for this improvement, I am happy to report that for highly compressable datasets in BigQuery physical storage pricing is an absolute win.

The bad - goodbye to flat rate query pricing

Most of what has been marketed about BigQuery Editions is on this side of the house. They now market "autoscaling", presumably because suits with little to no infrastructure experience absolutely love the sound of that word. This is a shot from Google's internal customer marketing about what the autoscaling experience looks like:

Now, do your data warehouse workloads actually look like this? Because ours look like this:

Maybe the marketing version is over a 60 second timeframe, I don't know. The autoscaler is supposed to work in 1 minute increments, and granted we have a pretty busy warehouse, but the "capacity we're using" and the "capacity we're being charged for" do not fit each other nearly as neatly in the marketing. Possibly the granularity of this report over several hours is not tight enough to let me see the prettier truth, but to find this out I have to go build my own reporting out of the INFORMATION SCHEMA.

The capacity that we've reserved is 100 "baseline slots" with a max of 400 "autoscaled slots". I think this means that we're being charged for 100 slots no matter what we we do with them - your standard reserved capacity model - but that as the autoscaler detects whatever it detects, it will scale our billed-for capacity up to 400 slots - your standard on demand model. In other words, it is the worst of both worlds: we are back to a highly variable cost structure but with a maximum capacity cap in place that ensures that I have to care about both unpredictable costs and poor performance. In all the previous models it was either one or the other, now it's both.

And frankly, the autoscaler doesn't look very good as it autoscales your costs in a way that appears to only somewhat correlate with your actual usage per their reporting.

Here's how it's gone for the year so far:

That big middle finger on the right side? That was a Materialized View that kept failing for a week before I noticed it. If we were on demand it wouldn't even have caused that, as query failures don't register on the TB-billed side of things. It truly is a bummer. Granted, we are not doing any long term commitments yet, primarily because I have no idea how they work. You reserve in chunks of 100 slots, so does that mean that to cover my 400-max workload I have to reserve 400 slots and they are always on and we're back to flat rate pricing at a vastly higher rate? In fairness, I haven't asked our GCP reps yet but I don't know as of today.

In conclusion

The moves that the BigQuery pricing team have made this year have mostly been a net positive for us, as we are heavy users of BQ and store a lot of data there. The new physical storage pricing has been a huge savings for us, but the new Editions pricing on the compute side of the house is pretty annoying. I was blessed with being able to ignore compute costs for the most part for a little bit, but not anymore. Here's the full story from Q2 - present:

Thanks readers, and hopefully this can help some of you better understand these new BigQuery pricing changes for your org.